This post continues our series on webshells. The first part gave a short introduction to webshells, explaining what they are and the most common vectors for installing them on victim machines. The second presented a real-life intrusion scenario in which webshells played a major role. The third introduced defence strategies and tested webshell detection tools.

We are back with the webshell topic because the last post was warmly welcomed by our readers. First of all, sorry for the delay! We received a few messages asking for a continuation of this series, so here we are. Even after such a long time (by IT standards) the subject has not lost its relevance; judging by recent web trends and social media discussions, it is still hot. In this post I perform more structured tests of several publicly available webshell detection tools.

Test details - sources

Some time ago Recorded Future published a great writeup on webshells. Two key takeaways were the high popularity of webshells amongst Chinese criminals and the continual development of new samples. It came as no surprise that a large number of samples in my data set seemed to be of Chinese origin.

I used the following well-known webshell repositories to create my own testing superset:

- https://github.com/bartblaze/PHP-backdoors

- https://github.com/JohnTroony/php-webshells

- https://github.com/BDLeet/public-shell

- https://github.com/tennc/webshell

- http://www.irongeek.com/i.php?page=webshells-and-rfis

- https://github.com/epinna/weevely3

- https://github.com/wireghoul/htshells

A few comments are needed here. The first three repositories are great collections of webshells, mostly written in PHP. I needed to clean up the tennc repository a little by eliminating webshells that I was not interested in and removing unrelated files such as images and readme files. The material from irongeek.com was something I found by accident, but I really liked it and decided to include the five most recently added files in this research. Weevely (version 3.4) is a well-known webshell generation framework that is also part of Kali Linux. As its webshell agent code is polymorphic, I generated fifty different samples to ensure good test coverage. Last but not least, htshells had its 15 minutes of fame about 3-4 years ago; although old-fashioned, it is still relevant today. Just think: when was the last time you saw AllowOverride ALL? There are still many admins looking for advice on how to turn it on. Thanks Tomi for bringing this to my attention!

Moving on: when collecting samples I focused on the file formats that are most popular and prevalent in the wild - ASP.NET, JSP and PHP. Some of the files had a .TXT extension or contained webshell code disguised as a JPG or GIF file. I also did not remove ColdFusion webshells. For shits and giggles, I left them in as a less popular type of webshell to see how the tools would react. Some of them can still be spotted in the wild from time to time (1,2).

The entire collection came to more than 1,000 files. Because the repositories overlap, I deduplicated the files by comparing their hashes, so please relax - no fail here :)
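Hash-based deduplication of this kind can be sketched in a few lines of Python. This is only an illustration of the idea, not the procedure used for the test: the choice of SHA-256 and the function names are assumptions.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root: Path) -> dict:
    """Group files under root by content hash; return only groups with more than one file."""
    groups = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            groups.setdefault(sha256_of(path), []).append(path)
    return {digest: paths for digest, paths in groups.items() if len(paths) > 1}
```

Keeping the first path of each group and deleting the rest leaves one copy of every unique sample. Note that this only catches byte-identical files; two copies of the same shell that differ by a single comment will hash differently.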

Test details - methodology

The overall detection rate was the primary objective of this test. It is a simple ratio of detected webshells to the entire collection. Detection variety (obfuscated/non-obfuscated samples, programming languages, miscellaneous formats) was the second factor. Next on the list was the false positive ratio, and the final factor was speed. For tools running under Linux/*nix, I used the time command to measure the elapsed time. Speed was only measured for scenarios where the full data set was used. The exception was OMENS: it is a Windows tool, so it was tested on a different VM and its scanning speed was not measured.
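For clarity, the two ratios can be written down as a small Python sketch. The function names are hypothetical, and the false positive ratio is assumed here to be the share of known-clean files that a tool flags; the post does not spell out that definition.

```python
def detection_rate(detected: int, total_webshells: int) -> float:
    """Percentage of webshells in the data set that the tool flagged."""
    if total_webshells <= 0:
        raise ValueError("total_webshells must be positive")
    return 100.0 * detected / total_webshells


def false_positive_ratio(flagged_valid: int, total_valid: int) -> float:
    """Percentage of known-clean files wrongly flagged (assumed definition)."""
    if total_valid <= 0:
        raise ValueError("total_valid must be positive")
    return 100.0 * flagged_valid / total_valid


# Example with a figure quoted later in this post: 910 of 1107 webshells detected.
print(round(detection_rate(910, 1107), 1))
```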

All of the above factors were the criteria for evaluating the tools. Of course, I am aware that each tool is different and works in a distinct manner, but in the end all of them have the same objective - to detect webshells. And that is exactly what was tested. I wanted to find the best tool in two categories:

- Overall detection with the smallest false positive ratio

- Overall PHP webshell detection with the smallest false positive ratio

At this point it is necessary to mention that, for some tools, the documentation indicated that only specific file formats are supported. As a result, I needed to create multiple tailored subsets of my initial data set. PHP webshells are currently the most popular type, hence the majority of webshell detection tools support them. That is why I also tested how successful the tools are in that area.

All tools were tested in exactly the same way. The data sets were uploaded to a server and then each tool was run against them with its default ruleset in the following scenarios:

- Shell Detector

  - All sources (1107)

  - Only PHP webshells (560)

- LOKI

  - All sources (1107)

  - Only PHP webshells (560)

- PHP-malware-finder

  - All sources (1107)

  - Only PHP webshells (560)

  - PHP webshells + PHP valid files (560 + 2480)

- OMENS

  - All sources (1107)

  - All sources + valid files (1107 + 4187)

NOTE: “valid files” refers to the same collection of random files used in part 3.

Without further ado, I hope everything is clear now and we can start reviewing the test results!

Shell Detector

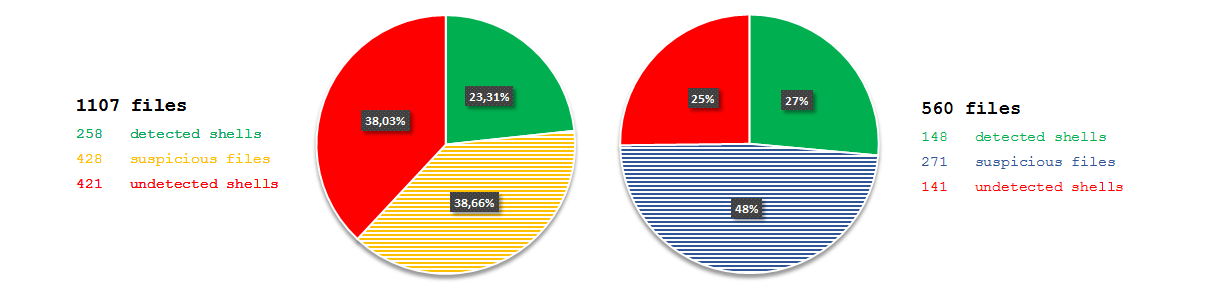

I started with our old friend, Shell Detector. As you may remember, last time it was not our contest winner, but I wanted to test it as it is still being mentioned in many webshell detection writeups.

Shell Detector marks files as either suspicious or webshells - it is worth mentioning that webshells are also marked as suspicious files (double tag)! I focused only on the webshell tag, because the suspicious tag was too broad (many false positives), as shown last time.

And what were the results? Only 1 out of every 5 files was recognized. Even when I tested only PHP/ASP files, detection stayed at the same level. The execution time was not satisfying either - 5 minutes and 16 seconds.

LOKI

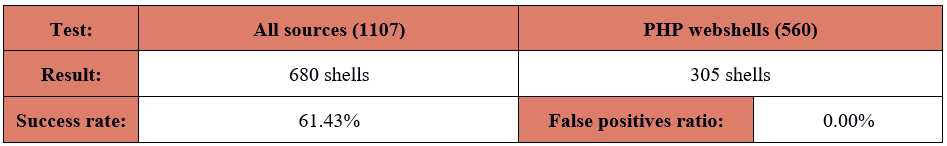

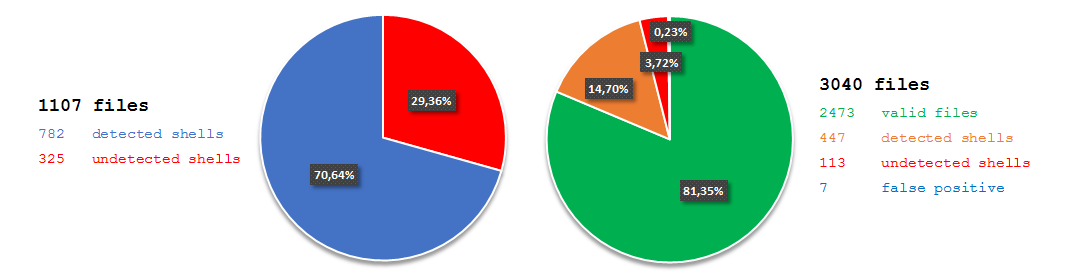

The second tool in our test should also be familiar to you. LOKI did very well in the previous part of this series and I expected good results this time too. Without wasting your precious time, let’s jump to the test results:

Honestly, I was surprised that it did not perform as well as I expected. A detection ratio of around 60% is NOT a bad score, but I was hoping for much better results based on the previous test. Not this time. Please remember that I only used the default rulesets provided by each tool.

Analysis of the results gave me a few interesting observations. First of all, LOKI did not detect htshells, because it has no relevant rules for them. The situation was a little different for the Weevely backdoor agents. None of the fifty agents was detected, despite the existence of a dedicated rule in the thor-webshell.yar ruleset. I must mention that this rule was created back in 2014 and is no longer applicable to Weevely 3.x PHP agents (it works just fine for older versions, e.g. 1.1). Additionally, LOKI had a problem with the PHP files from the caidao-shell repository, which includes different types of China Chopper webshells and clients. Last but not least, I observed that LOKI had problems with obfuscated files. This was easy to see in the results for the PHP-backdoors repository, where I noticed only a few hits.

The next part of the test was the false positive ratio. Once again I can say that LOKI was able to overcome this challenge. I tested it twice with two different groups of valid files; both attempts were successful, as I did not get any false positives.

Execution time - 39 seconds - was the best out of all tested tools.

PHP-malware-finder

And now, I would like to warmly welcome a newcomer to our series - PHP-malware-finder! I learned about it from its authors on Twitter - thank you very much!

@dfir_it Hello! We read your articles about Webshell and wondered if you would like to test our detection tool: https://t.co/xCM57EiRGx

— nbs_system (@nbs_system) August 23, 2016

It goes without saying that I was happy to test it. Before I move on to the test results, though, I would like to briefly introduce the tool. From its GitHub page:

Detection is performed by crawling the filesystem and testing files against a set of YARA rules. Yes, it’s that simple!

YARA plus effective rules sounded like a good recipe for decent results in our tests. In addition, the authors mention a few features that can increase detection of obfuscated files. It all gave me hope for a high detection percentage.

What does it look like when executed? The user is presented with simple output without many details explaining the reason for detection - just short information about which YARA rules fired (e.g. DodgyPhp) or whether the file was suspiciously short (TooShort):


I noticed a few things that might be noteworthy:

- Verbose mode. It executes YARA with the “-s” option (print matching strings). The output is not formatted and, as a result, not very readable.

- Fast mode. Again it uses a YARA feature (-f, fast scan). Fast mode stops searching for a string once it has been found, so when combined with verbose mode you will not see all occurrences of the string in YARA’s output, just the first one. In our test it was not a game changer - the scan took only one second less.

- Results. There is a small quirk in how system paths are printed. As you can see in the listing above, I added a slash (/) at the end of the path where all webshells were uploaded. That slash was duplicated at least once in some of the results, as a consequence of the way YARA joins path strings. It produced hidden duplicates when I used the sort and uniq tools, because the same file could appear under two differently spelled paths. This might also affect your workflow if you plan to integrate this tool with alerting systems (e.g. SIEM). Be careful!
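One way to guard against the path quirk described in the last point is to normalise paths before deduplicating them. The sketch below is an assumption-laden illustration, not part of the tool: it assumes each input line has already been reduced to just a file path, and the function name and sample paths are hypothetical.

```python
import posixpath


def normalise_paths(lines):
    """Collapse repeated slashes and return the sorted set of unique paths."""
    cleaned = (line.strip() for line in lines)
    return sorted({posixpath.normpath(path) for path in cleaned if path})


# Hypothetical sample: the same file reported with and without a doubled slash.
raw = [
    "/var/www/shells//c99.php",
    "/var/www/shells/c99.php",
    "/var/www/shells/r57.php",
]
print(normalise_paths(raw))
```

Be aware that posixpath.normpath also resolves "." and ".." components, and that POSIX semantics keep a path starting with exactly two slashes as-is, so this is a convenience for reporting rather than a security control.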


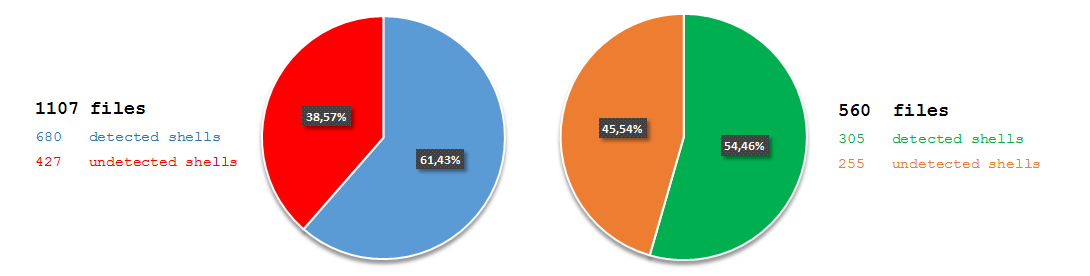

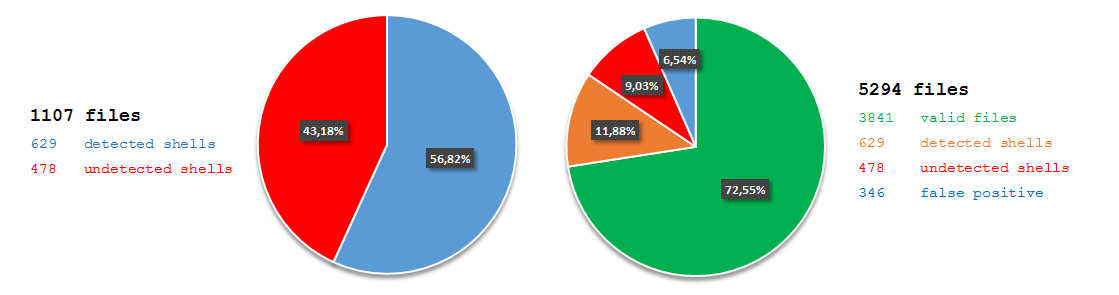

Let’s move on to our test and check the PHP-malware-finder!

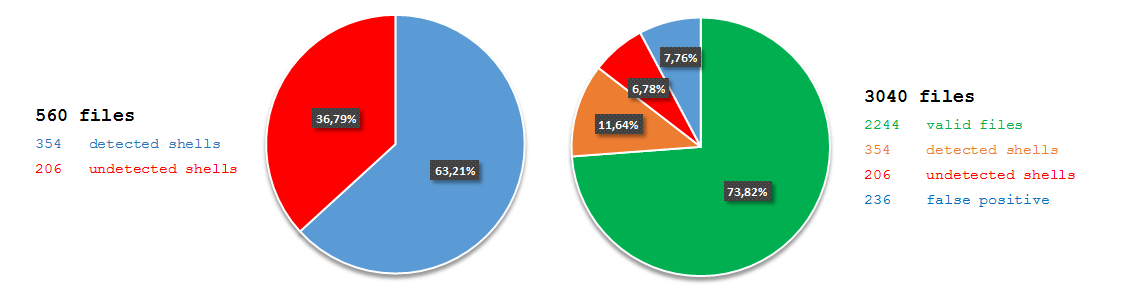

As you can see, PHP-malware-finder (PMF) achieved better results than LOKI. I need to mention that to perform the full test with PMF I had to execute it twice, setting the language (-l switch) to PHP and then to ASP. The reason is the way PMF processes rules. When used with the -l switch, the tool checks each file against php.yar or asp.yar respectively. The first rule in both files is a global private rule that checks whether the file format is compatible with the user’s choice. If it is not, no other rule in that file can match, because in YARA a global rule must be satisfied before any other rule is reported. Finally, I merged the outputs to obtain the final result.

Regarding the result for the PHP test set, I was super glad - big WOW! Almost 80% looked really good. There was room for improvement, but I considered it a really promising foundation for further development. Much better than LOKI with its default YARA ruleset. One more thing was the false positive rate. It was extremely low and would be tolerable in production.

I expected the execution time to be close to LOKI’s, as the detection methods of both tools are similar. As sometimes happens, reality did not meet expectations. PHP-malware-finder, when executed without any additional switches, needed 1 minute and 41 seconds to complete the scan.

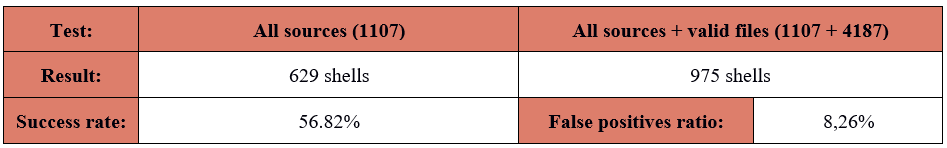

OMENS

Next debut here! Yet again I learned about this tool from Twitter (I love social media!).

@lennyzeltser @maridegrazia @dfir_it if you get a chance checkout *OMENS*. I'm kinda partial to it ;)https://t.co/dMlbWYyPei

— Quix0te (@OMENScan) July 8, 2016

Of course, I tested it with pleasure!

OMENS is free and closed source. The author explains the reason for this decision. Even though I am personally a fan of open source tools, I can think of various reasons for making such a choice and I respect that. For more information about this tool, I recommend reading the official documentation.

I am happy to see a dedicated tool for Windows OS, as most of the available tools focus on *nix systems. Output looks pretty nice. It contains detailed information about each hit, including full path to an affected file plus information which files were added since the last scan. Below is a sample output:

Another handy feature generates a result file named BadHTML.log. It lists all files marked as suspicious and can easily be used as input to other programs/devices for further analysis or to block traffic.

Final results from test:

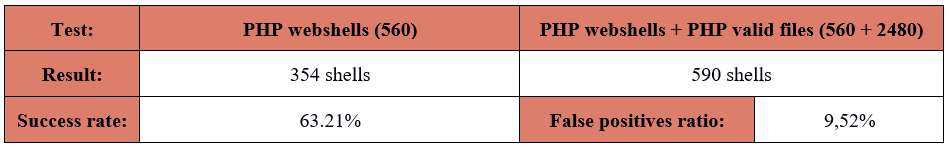

The detection ratio for all sources was similar to the YARA-based tools - 56%. The problem was the high false positive rate - more than 8%. The documentation does not mention any limitation on supported file formats, so the high false positive ratio was probably not the effect of a badly composed test set. Unfortunately, this can cause a lot of hassle for the people tasked with reviewing alerts. In line with our goals, I performed one additional test with only PHP files:

The results were similar to the first test: a good detection rate but a high level of false positives - 9.52%. In my opinion it is not feasible to maintain a production tool with such a high false positive ratio. I would recommend this tool if it were possible to easily edit signatures, which would allow me to tune the ones generating a large number of false positives and to create new ones to improve detection. Unfortunately, OMENS in its current shape does not allow these kinds of changes.

As mentioned in the methodology section, I did not measure scanning speed for this tool.

“Better Together”

The subtitle of this part is not accidental (I named it after Jack Johnson’s song). Right after my tests were completed, I started thinking about how to push the overall detection score above 90%. Two of the projects use YARA, so naturally I decided to combine the rule databases of both tools and see whether it would help.

To begin with, I compared the test results from both tools to see how many webshells detected by one were missed by the other. The output files from LOKI and PHP-malware-finder were formatted to contain only the sorted full paths of matched files. Next I searched for the differences between the two files:
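A comparable comparison can be sketched in Python with set operations. This is not the command used in the original test, only an illustration under the assumption that each tool’s output has already been reduced to a list of full paths; the function name and the sample paths are hypothetical.

```python
def compare_detections(loki_paths, pmf_paths):
    """Split detections into those unique to each tool and those shared by both."""
    loki, pmf = set(loki_paths), set(pmf_paths)
    return {
        "only_loki": sorted(loki - pmf),
        "only_pmf": sorted(pmf - loki),
        "both": sorted(loki & pmf),
        "union_count": len(loki | pmf),
    }


# Hypothetical sample data standing in for the two result files.
loki_hits = ["/shells/a.php", "/shells/b.asp"]
pmf_hits = ["/shells/a.php", "/shells/c.php"]
summary = compare_detections(loki_hits, pmf_hits)
print(summary["only_loki"], summary["only_pmf"], summary["union_count"])
```

The union count is the number that matters for a merged detection rate: every file flagged by at least one of the tools.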


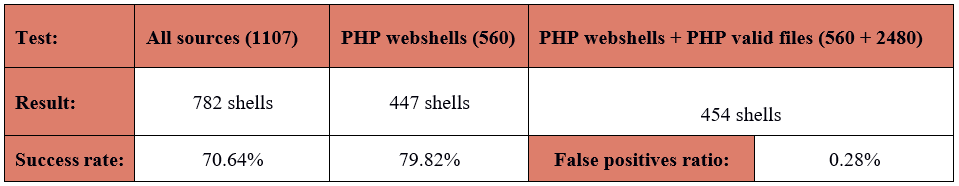

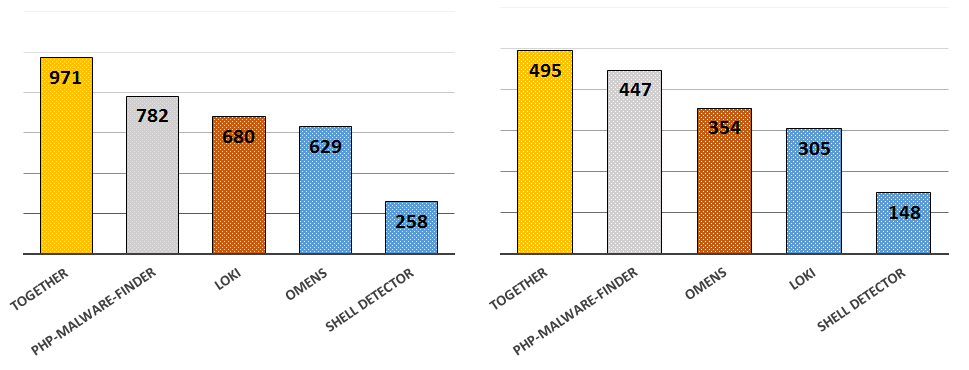

As soon as I saw the result of that operation I just wanted to head to my favourite bar for a glass of something good. You may ask why I was so happy. The short answer is the very long list of differences between the two files. When I merged the results I got an 82.2% detection rate (910 webshells detected). A huge improvement. It was not perfect, but I still had some tricks up my sleeve to increase the overall ratio by adding new YARA rules.

Let’s put it all together in one tool. I decided to use LOKI, mostly because of its scanning speed and logging enabled by default. I copied PHP-malware-finder’s .yar files to LOKI’s signature-base/yara/ directory and started testing. As you can imagine, it is never that simple. Story of my life. Both tools use YARA but are built differently, so there were a few changes that I needed to apply:

- I removed the whitelist checks - a pretty nice feature, but I decided it would be too much hassle to move it to LOKI

- One of the rules in the file bad_php.yar contained a small error in a regular expression. I corrected the Misc rule by adding a closing bracket:

  $chmod = /chmod\s*(.*777)/

- I needed to remove two global private rules from asp.yar and php.yar. Both were affecting the scanning of files that did not match PHP or ASP characteristics and thus impacting the final results.
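The effect of the missing bracket in the regular expression from the list above can be illustrated with Python’s re module. This is only an analogy: YARA uses its own regex engine, and the broken form shown here (the corrected pattern minus its closing bracket) is an assumption about what the original rule looked like.

```python
import re


def is_valid_regex(pattern: str) -> bool:
    """Return True if the pattern compiles, False if it is malformed."""
    try:
        re.compile(pattern)
        return True
    except re.error:
        return False


broken = r"chmod\s*(.*777"   # assumed original: the group is never closed
fixed = r"chmod\s*(.*777)"   # corrected pattern quoted in the post

print(is_valid_regex(broken), is_valid_regex(fixed))

# The corrected pattern matches a typical world-writable chmod call.
print(bool(re.search(fixed, "chmod($file, 0777);")))
```

Note that the brackets form a regex group rather than matching a literal bracket, so the pattern matches "chmod", optional whitespace, and then anything up to "777" on the same line.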

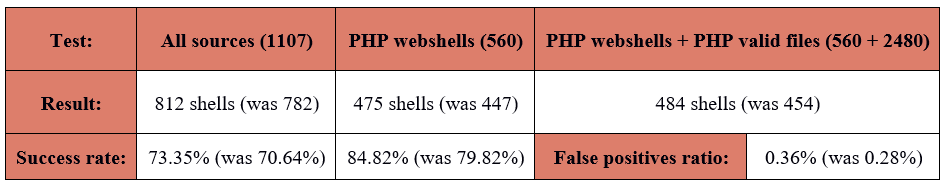

And now the best part: after resolving the problems listed above, the detection ratio increased! LOKI detected 968 shells, a detection rate of 87.44%. I also tested PHP-malware-finder with the last two changes and saw much better results than before:

These results were really good, but it was still not our last word!

Do you remember the modified ruleset from the last part of this series? Yes, now it was time to play that card. I did not expect much, because the detection ratio was already high, but I was not disappointed: I got another three matches, so at that point I had 971 findings (495 findings for PHP files - 88.39%).

Naturally, the main question came to my mind: “Which files are still not covered?!”

Time to answer that question. I had a list of 136 undetected files. Broken down by format, the extension with the most undetected files was PHP, with 65. However, it is worth mentioning that if we instead consider the proportion of undetected files to tested files of each format, ASP takes first place: 43 out of 141 files, an undetected ratio of 30.5%. In addition, TXT files (7 in total, containing 3 PHP, 2 ASP and 2 JSP webshells) and one example each of a ColdFusion and an htaccess shell (I was positively surprised!) remained undetected. I also examined the relation between obfuscated and non-obfuscated PHP files (including the three hiding behind a TXT extension) in the collection of undetected files. The graph below presents the results:

A deeper look at the results gave me a few observations about what was not detected. Take a closer look at the details:

- many Unknown shells from PHP-backdoors repo (mostly obfuscated)

- three variants of MailerShell (also PHP-backdoors)

- a few files related to caidao-shell (tennc/webshell repo)

- a few files from collection of drag library scripts (tennc/webshell/drag repo)

- a few WAF bypass files (bypass-waf-$date format from tennc/webshell/php repo)
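The per-format comparison made earlier in this section (raw counts versus proportions) can be reproduced with a short Python sketch. The counts come from the figures in this post; pairing the 65 undetected PHP files with the 560-file PHP subset is an assumption, and the totals for the other formats are not given, so they are left out.

```python
# (undetected, tested) per format, using figures quoted in this post.
# The PHP denominator (560) is an assumption based on the PHP-only test set.
counts = {"PHP": (65, 560), "ASP": (43, 141)}


def undetected_share(undetected: int, tested: int) -> float:
    """Percentage of tested files of one format that stayed undetected."""
    return 100.0 * undetected / tested


worst_by_count = max(counts, key=lambda fmt: counts[fmt][0])
worst_by_share = max(counts, key=lambda fmt: undetected_share(*counts[fmt]))

for fmt, (undetected, tested) in counts.items():
    print(fmt, undetected, round(undetected_share(undetected, tested), 1))
print(worst_by_count, worst_by_share)
```

PHP leads in absolute numbers simply because it dominates the data set, while ASP has the larger share of misses relative to how many ASP files were tested.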

I would like to go into more detail, but that is material for a different article in which I could analyse step by step why these files were not detected. However, I hope that the above analysis will be a good source of information for the developers of the tested tools.

Conclusion

One sentence to sum up all of the above: do not give up, my blue team friends! Although none of the tested tools achieved a 100% success rate, everybody knows and agrees that detection tools cannot be the only layer in your defence strategy. It is something I touched upon in the last part of this series. Even though I have highlighted the limitations and weaknesses of some tools in this post, I hope not to discourage anyone from developing and improving the community toolset. It is a continual process, and learning from each other hopefully helps to make a difference at the end of the day. A positive result of this research is that, by sharing experience and combining the work of people from different companies, backgrounds and projects, we can bring tangible benefits to all of us.

Gold medal goes to COOPERATION! As always - keep fighting! Keep defending!

PS. If you write YARA rules for your own use, consider sharing them with the community by submitting them to the YaraRules Project.